Building a World Where Anyone Can Use Imaging Sensors

Co-innovation between Sony Semiconductor Solutions and Microsoft AI & IoT Insider Lab

In this seventh installment of our Sessions series, we would like to introduce a “co-innovation” effort between Sony Semiconductor Solutions (SSS), a semiconductor company specializing in imaging and sensing technologies, including the intelligent vision sensor, and Microsoft, a company known for its cloud computing technologies, in the development of AITRIOS™, a new AI platform that harnesses SSS’s sensing technologies. The two companies are working together at the AI Co-Innovation Lab, which helps partners easily build solutions using AI-based vision sensors, with the aim of making everyone’s life and work easier through advances in both edge and cloud technologies. The two companies are also collaborating on new approaches to establishing rules for AI ethics. We spoke with Jun Yamasaki, who runs the AI Co-Innovation Lab at Microsoft, and Shota Watanabe, business development manager for AITRIOS at Sony Semiconductor Solutions America.

What is AITRIOS?

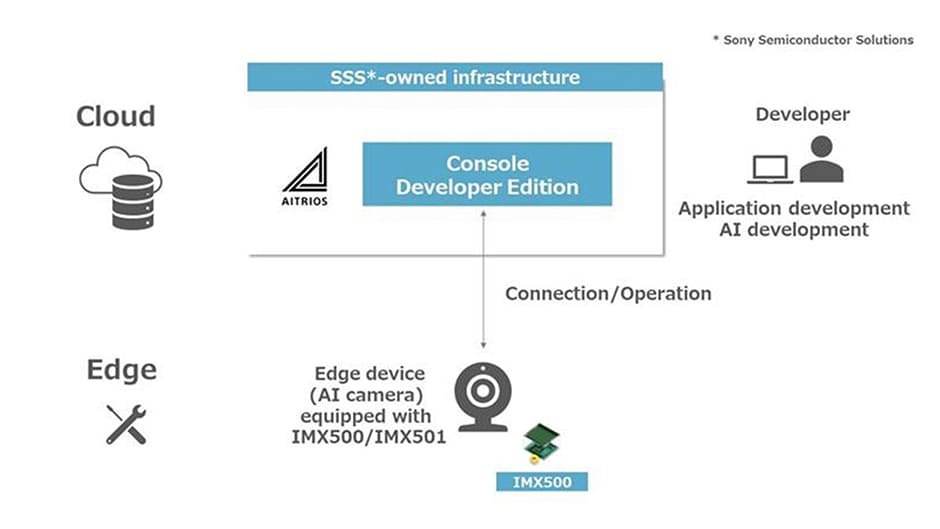

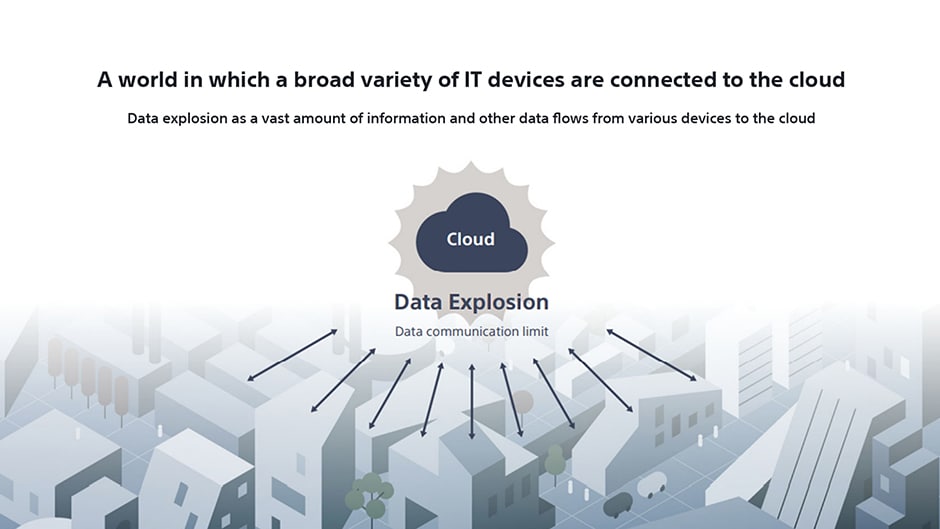

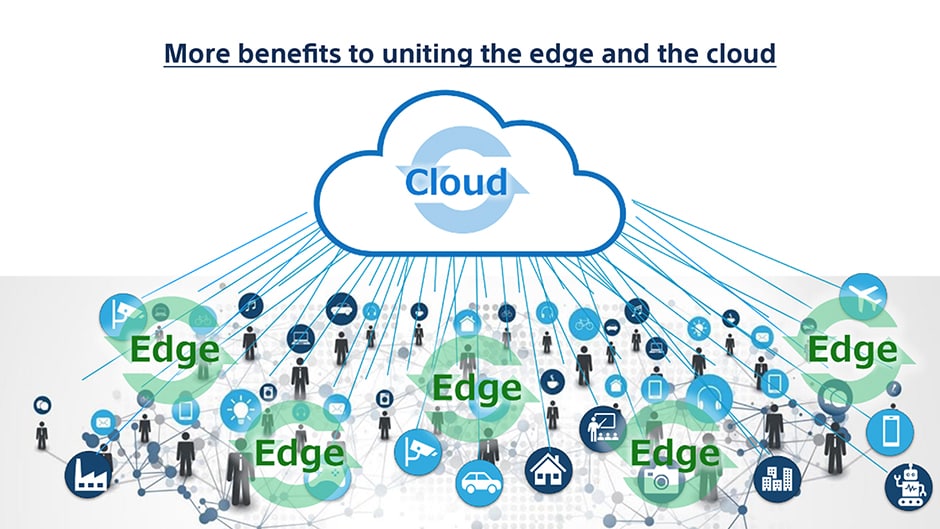

AITRIOS is a new edge AI sensing platform currently being developed by SSS. As the number of devices connected to the IoT* grows, the volume of data and the workload of processing many types of data in the cloud are increasing significantly. This leads to higher communication latency, higher costs for processing and analyzing data, higher power consumption, and greater privacy and security risks. AITRIOS aims to solve these problems through edge AI: intelligent systems in local devices, such as cameras and sensors, that process raw input data on the device and extract only the necessary insights before sending them to the cloud, rather than processing everything in the cloud. Through AITRIOS, edge AI and cloud technologies can work in harmony, and the platform has been designed to support large-scale deployment of edge AI solutions developed by many types of partners. AITRIOS aims to create new value and applications across a variety of industries and use cases by helping to solve the problems they face.

- *IoT = “Internet of Things,” in which various objects are connected to the Internet to exchange information.

PROFILE

Co-Innovation Partner

Jun Yamasaki

Manager, AI Business Development

Head of AI Co-Innovation Lab

Microsoft Corporation

Shota Watanabe

Business Development Manager, AITRIOS

Sony Semiconductor Solutions America

What is the AI Co-Innovation Lab?

How does the AI Co-Innovation Lab bring together AI and IoT experts from Sony and Microsoft?

Yamasaki: We built the AI Co-Innovation Lab as a collaboration space for Sony and Microsoft, a place to engage with clients where all three parties work together to build systems that meet the clients’ needs.

Combining Sony’s IMX500, AITRIOS, and other technologies with our Azure platform was not enough on its own; we also needed our clients’ use cases. In our discussions with Sony, we realized that what we needed was a place where the three parties could work together. So we started the AI Co-Innovation Lab, and now we have a place to bring all three parties together to come up with solutions.

Watanabe: It is really exciting to have Sony’s engineers, Microsoft’s engineers, and the clients’ engineers in the same room. With experts on both edge and cloud available in the room, discussions always move productively and efficiently toward what the client wants.

An Imagery and AI Platform for Everyone

What is the current state of these technologies, and what challenges are the world’s leading technology companies facing in using them?

Watanabe: I think there are already a lot of so-called IoT applications, such as connecting temperature sensors and other devices to the cloud to analyze and monitor assets. We’re looking at enabling new applications that no one has seen before by making our image sensors IoT-enabled as well. However, the data captured by an image sensor is very large and, by itself, unstructured. Simply put, it’s an array of brightness values, one for each of millions of pixels. The problem is that if you simply send all of that data to the cloud from thousands of devices at the same time, the load on the network and the cloud will be overwhelming.

Yamasaki: In the cases we have seen over the last few years, many clients want to send data from engines to the cloud, process a wider variety of data in real time, including vibration and voltage data as well as video, and use that data for their own use cases. At the same time, we also hear that having to send so many types of data puts an even greater burden on the network.

Watanabe: Yes, and that’s why we think it’s better to extract only the most essential information as close to the data source as possible, and then aggregate that much smaller data in the cloud. Take a video stream, for example. Video data becomes very large when it mixes information that is truly necessary for the specific application with information that is not. So, ideally, an edge application would extract only the truly essential information from that image data and send only that to the cloud.
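To make the scale of this reduction concrete, here is a minimal Python sketch that compares the size of one raw frame with the size of a message carrying only an extracted insight. The frame dimensions (1920 x 1080, 3 bytes per pixel), the JSON field names, and the helper functions are hypothetical illustrations, not part of AITRIOS or any Azure API; the person count is assumed to come from an on-device AI model that is not shown.

```python
import json

# Hypothetical frame: 1920 x 1080 pixels, 3 bytes (RGB) per pixel, uncompressed.
WIDTH, HEIGHT, BYTES_PER_PIXEL = 1920, 1080, 3


def raw_frame_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Size of one uncompressed frame: one value per channel for every pixel."""
    return width * height * bytes_per_pixel


def edge_payload(person_count: int, timestamp: str) -> bytes:
    """Serialize only the extracted insight (here, a person count) as compact JSON.

    The field names are hypothetical; a real deployment defines its own schema.
    """
    message = {"ts": timestamp, "people": person_count}
    return json.dumps(message, separators=(",", ":")).encode("utf-8")


if __name__ == "__main__":
    raw = raw_frame_bytes(WIDTH, HEIGHT, BYTES_PER_PIXEL)
    meta = edge_payload(4, "2022-01-01T09:00:00Z")
    print(f"raw frame: {raw:,} bytes")
    print(f"edge payload: {len(meta)} bytes")
    print(f"reduction factor: {raw // len(meta):,}x")
```

Even uncompressed, the single raw frame here is roughly five orders of magnitude larger than the metadata message. Real video streams are compressed, so the actual ratio would be smaller, but multiplied across thousands of devices the gap illustrates the network burden described above.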

Yamasaki: Yeah, video data can be huge. If we try to send all the video taken by a camera to the cloud for processing, we lose the ability to do it in real time. I actually used to work in the film industry, and if we wanted to apply some kind of AI processing to the video we shot, we had to upload it all to the cloud. We couldn’t do that in real time, and I’ve always wanted to figure out how to solve that problem.

A Platform that Lets Anyone Process Imagery with AI

We also spoke with the two about SSS’s edge AI sensing platform, AITRIOS, and Microsoft’s Azure.

Watanabe: We are currently working on an edge AI sensing platform called AITRIOS. AITRIOS is a platform that lets you connect image sensors and other sensors to the cloud as IoT devices, and it is designed to handle a truly diverse range of use cases. If you tried to do the same thing all by yourself, you would have to start by building an engineering team skilled in image processing, embedded software development, and AI development, with all the necessary cloud and networking skills as well. Our goal with AITRIOS is to build a platform that lowers the technological barriers to using IoT devices and enables financially viable solutions, so that anyone can use cloud-based edge AI image processing tailored to their own use cases.

Yamasaki: We are building the Microsoft Cloud, and we have the same sort of goal as AITRIOS.

Azure provides users with many useful tools for building their own use cases and adding intelligent processing to data received from the edge, while keeping the environment as low-code/no-code as possible. Its many services include AI, vision-based services, and speech-based services.

Watanabe: I think it may have been Microsoft or another cloud vendor that first introduced the concept of the “edge,” in contrast to cloud processing. Since then, there has been a gradual shift from systems in which everything is processed in the cloud toward more local edge processing, enabling designs that distribute processing on a larger scale.

Yamasaki: Image processing puts a huge burden on networks because large amounts of data have to be sent from cameras to the cloud, which makes it impossible to keep processing real-time. So instead of uploading everything to the cloud, you start processing the data at a step before it is sent, in what Microsoft calls the “intelligent edge” and Sony calls “edge AI.” Imagine a physical store equipped with tens, hundreds, or even thousands of cameras. The data from those cameras is partially processed in the store, and the results of that processing are uploaded to the cloud. The part of the system that does this processing before the data is uploaded to the cloud is what we call the “edge.”

Finding Use Cases that Produce New Value

We talked to the two about specific examples of how the AI Co-Innovation Lab is being used in the retail and smart building fields.

Watanabe: The cases the lab has worked on so far include a solution that lets retailers monitor shelves to know when restocking is needed, and another that controls the air conditioning in meeting rooms by monitoring the number of people inside, with the aim of reducing CO2 emissions. Using cameras, you can check that shelves are fully stocked and that the planned products are in their planned positions. That helps employees minimize the number of times they need to restock shelves, and it can help minimize lost sales caused by someone forgetting to restock.

Yamasaki: That’s right. In the retail case, too, some clients wanted to do processing on the edge side and were wondering how to create new solutions while addressing privacy concerns and infrastructure costs. We had amazing results with the air conditioning case: we were able to help reduce energy use and CO2 emissions. That use case is one that we at Microsoft are really proud of.

Yamasaki: You had that case with a building management client who wanted to solve the issue of the air conditioning running all the time, even when no one was in the meeting room.

Watanabe: In that case, a camera equipped with the IMX500 checks the number of people in the room, not who they are, and sends just that number to Azure. Our solution was to control the air conditioning on the Azure side, switching the system on or off according to conditions that change throughout the day, such as the number of people in the room and the time of day. No energy was wasted, because the air conditioning was controlled based on whether there were people in the room, so it only turned on when it was needed.
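The control logic described here can be sketched in a few lines of Python. This is a hypothetical illustration only: the message fields, the office-hours window, and the function names are assumptions, and the sketch makes no Azure SDK calls. In the real system, a decision like this would run in a cloud service and drive the building’s air conditioning controls. Note that the input carries only a head count, never an image or an identity.

```python
from dataclasses import dataclass
from datetime import time

# Hypothetical office-hours window; outside it the air conditioning stays off.
OFFICE_OPEN = time(8, 0)
OFFICE_CLOSE = time(19, 0)


@dataclass(frozen=True)
class OccupancyReport:
    """What the edge camera sends: a room ID and a head count, no image data."""
    room_id: str
    people: int
    local_time: time


def hvac_should_run(report: OccupancyReport) -> bool:
    """Run the air conditioning only during office hours and only if someone is present."""
    in_office_hours = OFFICE_OPEN <= report.local_time < OFFICE_CLOSE
    return in_office_hours and report.people > 0


if __name__ == "__main__":
    reports = [
        OccupancyReport("room-a", 3, time(10, 30)),
        OccupancyReport("room-a", 0, time(12, 15)),
        OccupancyReport("room-b", 2, time(21, 0)),
    ]
    for r in reports:
        state = "ON" if hvac_should_run(r) else "OFF"
        print(f"{r.room_id} at {r.local_time:%H:%M}: {r.people} people -> {state}")
```

A production controller would likely add a delay or hysteresis so that a room emptying for a few minutes does not toggle the system repeatedly; that refinement is left out of this sketch.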

Yamasaki: That case really made a big impression on me because it tackled both the challenge of dealing with COVID-19 and the sustainability agenda in an age of global warming.

Prioritizing Privacy Considerations: How to Use AI

What were some of the challenges Sony and Microsoft have faced in running the AI Co-Innovation Lab?

Yamasaki: When we first started talking about this partnership with Sony, I thought the concept was interesting. I think the most challenging thing was figuring out how to handle the privacy-sensitive video data coming from the cameras.

Watanabe: It took quite a lot of time to bring Microsoft’s team of experts and Sony’s team of experts together to create rules for how the Lab would operate. Our discussions about what advice and information we would give clients were long, but I’m glad we had them beforehand so that we had a base to work from. Microsoft and Sony have been committed to AI ethics for a long time, so I think it’s really meaningful that teams of experts from both companies are working together on this.

Yamasaki: I remember having some really heated discussions every week, but that experience is why the Lab is now so amazing.

Watanabe: I’d like to talk about AI ethics. We always think the most important thing is that no one is made to feel uncomfortable or unpleasant by AI-based technology, and we thought very hard about this topic. With applications that use cameras or other visual technology, people worry about their privacy, and that is a major element of AI ethics, a hurdle that needs to be cleared. For our part, we want to ensure privacy by processing data on the edge, and I think it was good that we were able to tackle the challenge with a two-pronged approach: technical measures to ensure privacy, together with operational AI ethics rulemaking.

Yamasaki: I think it is an amazing partnership.

The Potential of Vision Sensors

What do Sony and Microsoft expect from each other?

Watanabe: I think at Sony we have a lot of products and experience on the edge side; our strengths lie in devices. Microsoft is also involved with devices, but I think its strengths lie in the cloud. Our partnership combines the strengths of the two companies in a complementary way. In the future, more and more IoT devices will be sending data extracted on the edge to the cloud. When that happens, we need to be able to analyze a vast amount of useful information correctly and conveniently, and make it usable for users. Whether it’s tens, hundreds, millions, or billions of units, I would like to make AITRIOS into a platform that can handle any requirement, any request, and any use case.

Yamasaki: Microsoft’s expectation for AITRIOS is that more sensors will be made and used with Microsoft’s Azure cloud via AITRIOS to collect and handle various data and build solutions that meet client needs. I hope we can continue to work together on this. I would like to keep co-creating with Sony and using new technologies to come up with solutions that have never been seen before.

What does “co-innovation” mean to Sony?

We asked the AITRIOS team at SSS to talk about how their “co-creation” with the Microsoft lab is going.

Watanabe: Well, just as we named the lab the Co-Innovation Lab, I think that Sony’s strengths in edge applications and devices can be combined with Microsoft’s strengths in cloud platforms and applications to create “co-innovation” hand-in-hand with our clients.

Thank you.