Collaboration

Expanding the world with the power of sound: Create innovative real-time interactions

Jun 19, 2020

1. Kazuyuki Yamamoto, Tokyo Laboratory 08, R&D Center, Sony Corporation
2. Takashi Kinoshita, Tokyo Laboratory 08, R&D Center, Sony Corporation
3. Hiroshi Ito, Sound Wearable Preparation Office, V&S Business Group, Sony Home Entertainment & Sound Products Inc.
4. Takashi Kawakami, Department 5, V&S Software Technology Division, HES Software Center, Sony Home Entertainment & Sound Products Inc.
5. Hirohito Kondo, Sound Wearable Preparation Office, V&S Business Group, Sony Home Entertainment & Sound Products Inc.
6. Izumi Yagi, Sound Wearable Preparation Office, V&S Business Group, Sony Home Entertainment & Sound Products Inc.
7. Tsutomu Fuzawa, Creative Service Department, JAPAN Studio, Worldwide Studios, Sony Interactive Entertainment Inc.
8. Yoshihisa Ideguchi, Strategic Marketing Division, Sony Music Solutions Inc.

In addition to today's smartphones, the advent of devices that transmit information directly to the senses of hearing and sight, such as hearable devices and eyeglass-type wearables, is making a world in which we are constantly connected to networks a reality. Along with changes in social infrastructure, the relationship between people, devices, and information has changed, and the way we interact is entering a period of great change. The Sound AR™ Project was launched to open up new possibilities for the future of these wearables, and the creation of innovative real-time interactions was essential to its core technology. We asked the engineers involved how the project started, what Sound AR technology is, and how they went about creating the experiential content and sounds.

A period of change in interaction

──How are interactions changing now and how will they evolve in the future?

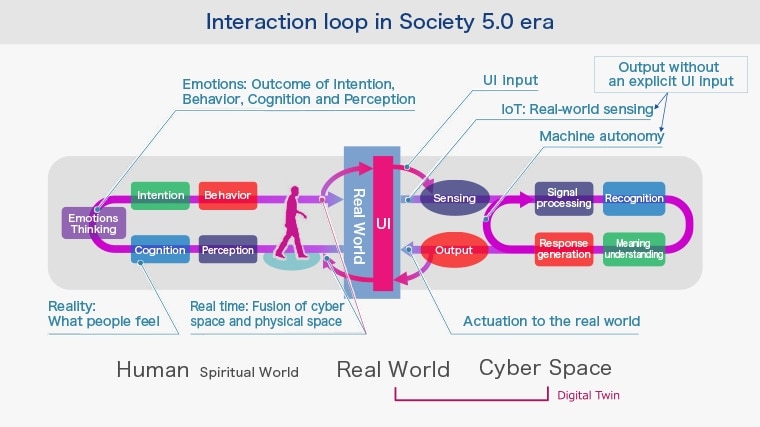

Kazuyuki Yamamoto: In the past, interaction meant pressing a button or touching a panel to operate an application and receiving information back from a display or speaker. However, as social infrastructure and interfaces change, devices and interactions are changing dramatically. AR (augmented reality) and VR (virtual reality) devices use gestures and other physical input instead of touch operations, IoT devices sense objects, and AI (artificial intelligence) thinks autonomously and produces output for humans even without explicit input. Entirely new interactions, completely different from conventional input and output operations, are starting to emerge. For a long time, we simply pressed a button to get feedback, but that world will give way to one with many different combinations of "humans" versus the "machine world" or the "information world." We think this will be a major game change for interaction.

Takashi Kawakami: I've been working on smart products with interactive features, and one question I've been trying to answer is whether dialogue or conversation is really necessary. For example, many people visiting Japan from overseas are surprised to see how easily Japanese people communicate with just "this" or "that," without naming specific things. If you say, "Please bring it," your friend or colleague immediately understands and brings exactly what you want. This is implicit communication, so to speak. It costs less and is very comfortable for some users.

Yamamoto: Talking to a smart speaker and getting a response is basically the same interaction as pressing a button. It is the same UI (user interface) as it was 100 years ago. In the future, however, the quality of interaction will change depending on how well devices can understand a user's situation and intent. I think the interaction of asking questions and getting answers will continue, but there are many situations where that kind of interaction is difficult to use. For example, some data show that AI assistant users find it hard to use them outside the home because they feel uncomfortable talking to them in front of other people. If we don't move beyond conversational communication, our opportunities to take advantage of AI may remain limited.

Hiroshi Ito: Some people stop thinking once they conclude that an AI assistant can do everything. Of course, there are areas where AI assistants are effective, but Sony has many strengths in devices, sensing technology, and spatial audio technology, so I think we can create new experiences by coordinating them well with AI. For example, if a smartphone or headphones can use built-in sensors to better understand the user's current situation, they can deliver the necessary information automatically, before the user has to ask an AI. I think it's possible to offer users a more pleasant experience in which they naturally receive the information they need when they need it.

Yamamoto: A conversation is just one form of interaction, and talking to a device is a transitional state. I think there should be a more natural state.

Restoring balance to biased information acquisition

──What is the more natural state of information acquisition?

Kawakami: When I see people looking at their smartphones while walking down the street, I get the impression that the "information world" is eroding the "real world." Next-generation 5G communication is said to handle data rates exceeding 10 Gbps, but people on the receiving end cannot take in 10 Gbps. In other words, people in the real world are overwhelmed by the amount of information. Because we receive more information than we can process, we end up looking at our smartphones even while walking down the street just to keep up. To address this social issue, we started the Sound AR Project in 2019 to realize a new AR experience in which users receive information through sound, based on the situation their devices recognize.

Ito: Today, we have too much information, and I think the balance between real life and information is getting worse for users. We often see people distracted by their smartphones in ways that disrupt real communication between people. However, by using sound to deliver information more skillfully, it is possible to give priority to the real world, and we feel this is a new opportunity the Sound AR Project can create.

Kawakami: I think the Walkman was the first wearable device to enhance the real world, by letting people carry music with them. Currently, the most popular way to extend reality is through vision, but hearing is well suited to extending reality easily because it involves a small amount of data and we can process various sounds in parallel. By directing vision, which takes in a great deal of information, to the "real world" and leaving the "information world" to hearing, we may be able to shift the biased balance a little back toward the real world. In other words, we would like to say, "Take your eyes off your smartphone and take a closer look at the real world."

Ito: New possibilities centered on sound and hearing cannot be realized without offering concrete experiences, so we are currently trying various approaches in the Sound AR Project. One example is the demonstration at Moominvalley Park.

A new entertainment experience with Sound AR

──What is the Sound AR Project?

Hirohito Kondo: Sound AR is based on the concept of "release from the smartphone screen" and combines real-world sounds with virtual-world sounds to provide an AR experience through hearing. The project was formed by unifying sound AR activities that had originally started in various departments within Sony. We believed that combining technology and entertainment is essential to realizing an AR experience of sound, so we asked engineers from various fields to join the project and bring together Sony's strengths. To offer this new sound experience and verify its business potential, we produced a sound attraction called "Sound Walk—Moominvalley Winter" at Moominvalley Park, which opened in 2019 in Hanno City, Saitama Prefecture.

©Moomin Characters ™

Izumi Yagi: Moominvalley Park is a wonderful theme park full of nature, and I thought we could make the place even more entertaining by layering various sounds, such as characters' voices, background music, environmental sounds, and sound effects linked to user movements. Through the Sound AR experience, the generated sounds blend with the real world and tell the story of the original novel "Moominvalley Winter," in which Moomintroll suddenly wakes up from hibernation and goes on adventures in a world of snow for the first time. When a user walks around the park wearing Sony's STH40D open-ear stereo headset and an Xperia™ smartphone, the sound of footsteps comes from the earphones as if the user were walking on snow. In the park, virtual Moomin characters approach and talk to the user, guiding them deeper into the Moomin world. Sounds linked to the user's position and actions are played back, so the user can enjoy the Sound AR experience while walking through the world of Moomin. With Sound AR, we were able to recreate a story using the entire park as a set and to turn places that had never been used for attractions into one.

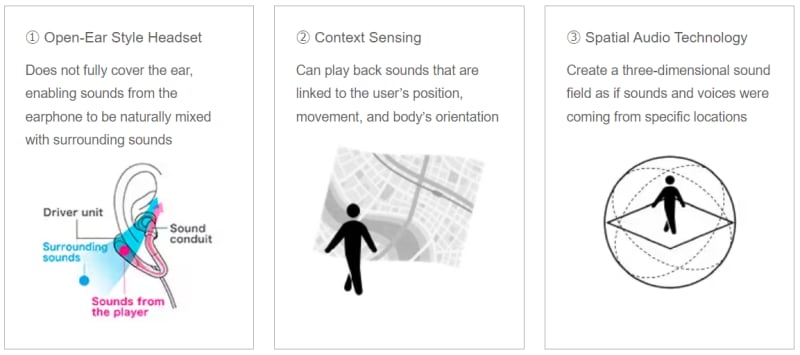

Sound AR is supported by three features. The first is an open-ear headset, which lets sounds from the earphones blend naturally with ambient sounds. The second is a system that senses the user's position and movement in real time and plays back the appropriate sounds and voices. Sony Home Entertainment & Sound Products (SHES) developed this system and created a cloud system that allows creators to place sounds at different points in space. The system automatically plays sounds based on estimates of the user's position and recognition of their behavior, so that the sounds are naturally overlaid on the real world. The third is spatial audio technology that creates a three-dimensional sound field. The combination of these technologies has created a more immersive experience.
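The position-triggered playback described above can be pictured as a geofence check: each sound a creator places has a location and a trigger radius, and the sound starts when the user's estimated position enters that radius. The sketch below is a minimal illustration under that assumption only; `SoundSpot`, `spots_to_trigger`, the trigger radius, and the use of great-circle (haversine) distance on GPS coordinates are hypothetical and are not taken from SHES's cloud system.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


@dataclass(frozen=True)
class SoundSpot:
    """Hypothetical sound placed on a map by a creator."""
    name: str
    lat: float
    lon: float
    radius_m: float  # assumed trigger radius around the spot


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def spots_to_trigger(spots, lat, lon, already_played):
    """Return spots whose radius contains the user, skipping ones already played.

    `already_played` is a set of spot names; it is updated in place so each
    spot fires only once per session (an assumed policy for this sketch).
    """
    triggered = []
    for spot in spots:
        if spot.name in already_played:
            continue
        if haversine_m(lat, lon, spot.lat, spot.lon) <= spot.radius_m:
            triggered.append(spot)
            already_played.add(spot.name)
    return triggered
```

For example, with a hypothetical spot at (35.0, 139.0) and a 20 m radius, a user at (35.0001, 139.0), about 11 m away, triggers it once; later checks at the same position return nothing because the spot is recorded as played. In practice the radius would need to be tuned against measured GPS error.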

Takashi Kinoshita: To provide a consistent sound experience to users as they move around the park, SHES focused on developing technology that accurately identifies the user's location and reliably delivers a large amount of sound content. SHES developed a system that lets individual pieces of sound content be placed on a map and plays them back based on the app's GPS location while communicating in real time. The R&D Center developed a technology that uses sensors to play footsteps at the moment the foot lands, as well as an object-based spatial audio technology that follows the user's body orientation. By implementing these technologies in the system, we are able to create a variety of scenes.
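Object-based spatial audio that follows the user's body orientation needs, at minimum, the direction of each sound object relative to where the user is facing. The sketch below assumes compass-style bearings (degrees clockwise from north) and uses simple constant-power stereo panning as a crude stand-in for spatial rendering; the function names are hypothetical. A true object-based spatial renderer typically uses head-related transfer functions (HRTFs) to place sounds in 3D, which this sketch does not attempt.

```python
import math


def relative_azimuth_deg(user_heading_deg: float, source_bearing_deg: float) -> float:
    """Angle of a sound object relative to the user's facing direction.

    Both inputs are compass bearings (degrees clockwise from north).
    Result is in (-180, 180]; positive means the source is to the user's right.
    """
    diff = (source_bearing_deg - user_heading_deg) % 360.0
    return diff - 360.0 if diff > 180.0 else diff


def constant_power_pan(azimuth_deg: float) -> tuple:
    """Map a relative azimuth to (left_gain, right_gain) with constant power.

    Plain stereo cannot distinguish front from back, so sources behind the
    listener are folded to the mirrored frontal angle (a limitation of this sketch).
    """
    az = azimuth_deg
    if az > 90.0:
        az = 180.0 - az
    elif az < -90.0:
        az = -180.0 - az
    # az is now in [-90, 90]; map it to a pan angle theta in [0, pi/2]
    theta = (az + 90.0) / 180.0 * (math.pi / 2)
    return math.cos(theta), math.sin(theta)
```

When the user turns, only `user_heading_deg` changes, so each object's gains can be recomputed from the latest sensor heading without moving the objects themselves; that separation of object position from listener orientation is the basic idea behind object-based rendering.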

We actually visited Moominvalley Park and took repeated measurements of GPS positioning and LTE communications to determine how to arrange sounds given current position estimation technology and how much data could appropriately be transmitted from the cloud. For detecting the moment of landing, we worked in various ways to accurately detect foot movement even with the smartphone hanging from the user's neck, and for spatial audio, we designed the interactions around how participants would actually behave on site. We made repeated adjustments until the characters and scenery felt genuinely present.
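The interview does not say how the moment of landing is detected. One common, simple approach, shown here only as an illustration, is to watch the magnitude of the accelerometer signal and report a footfall when it rises a fixed amount above gravity, with a short refractory period so one step is not counted twice. This is threshold-crossing detection, not Sony's method; the threshold, the refractory interval, and `detect_footfalls` itself are hypothetical, and a phone hanging from the neck would likely need filtering and per-user calibration on top of this.

```python
import math
from typing import Iterable, List, Tuple

GRAVITY = 9.81  # m/s^2


def detect_footfalls(
    samples: Iterable[Tuple[float, float, float, float]],
    threshold: float = 2.0,       # hypothetical: m/s^2 above gravity
    min_interval_s: float = 0.3,  # hypothetical refractory period between steps
) -> List[float]:
    """Return timestamps at which a landing is detected.

    Each sample is (t_seconds, ax, ay, az). A landing is reported at the first
    sample whose acceleration magnitude exceeds gravity by `threshold`, provided
    at least `min_interval_s` has passed since the previous detection.
    """
    footfalls: List[float] = []
    last_t = -math.inf
    for t, ax, ay, az in samples:
        excess = math.sqrt(ax * ax + ay * ay + az * az) - GRAVITY
        if excess > threshold and t - last_t >= min_interval_s:
            footfalls.append(t)
            last_t = t
    return footfalls
```

Reporting the first threshold crossing, rather than waiting for the peak, keeps detection latency low, which matters because a footstep sound is direct feedback to the user's own movement.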

Yoshihisa Ideguchi: Sony Music Solutions (SMS) has been collaborating on the development of Sound AR content since the project was launched. In this demonstration, we had to convey the story and the changing seasons through sound alone, for example expressing Moomintroll's changing feelings only with background music and the sound of the wind, so we focused on selecting music and producing sound effects. In particular, for the footstep interaction, in which the user hears the sound of stepping on snow while walking, we not only synchronized the sound with the user's steps in real time but also collected recordings of footsteps on snowy roads around the world. We pursued pleasant-sounding footsteps in snow that changes as winter turns to spring.

Tsutomu Fuzawa: In expressing the Moomin world through sound, we combined the technology developed by the R&D Center with the know-how and ideas we have cultivated through producing game sound. For example, in many of the storytelling scenes, we applied spatial audio techniques developed through trial and error on an experience prototype to create surround sound, realism, and immersion that conventional stereo playback could not achieve. On the other hand, simply asking users to listen to sounds is not enough to make an experience. In games, the user manipulates a controller to get real-time, interactive sound responses. In Sound Walk, the user, via the smartphone, becomes the controller in the real world. In other words, the body movements that the device senses become the input, and linking physical movement in the real world to sounds from the information world creates a sound AR experience in which real and virtual sounds are seamlessly fused into one.

Relationship between physical movement and real-time interaction

──What is the relationship between real-time interaction and body movement?

Kawakami: One familiar example of real-time interaction is our interaction with buildings. As you approach a building, its shape appears to change: it looks more three-dimensional and reveals its other sides. I think architects design buildings with this interaction between people and buildings in mind, considering how a building's appearance changes with the viewer's position. Even buildings, which do not move, change their appearance as we move. People are constantly engaged in real-time interaction in a variety of ways.

Yamamoto: If you look at a building from a different angle, its shape changes. Current AR and VR technology lets users experience this phenomenon. The information world copies and transcribes the real world, and real-time response is important here too. I believe one key point is that, in response to body movement, users should receive information that changes just as much as it would in the real world, without it feeling unnatural.

Kawakami: As with the footstep interaction in Sound Walk, we need to accurately capture the user's location and body movement, including changes in the direction they are looking, and reflect them in real time without any unnatural feeling. This is completely different from a world where information is returned simply in response to typing or talking.

Yamamoto: Pushing a button and getting a response back usually takes about 100 to 300 milliseconds, but this kind of interaction calls for a different latency (delay time). Tolerance differs between vision and hearing, and it varies by a factor of about 10 depending on the use case. Even though both happen in "real time," hearing music or information when you enter a certain place and hearing footsteps as you move are completely different experiences in terms of latency. When it comes to simply providing information, a delay of 10 to 20 seconds may go largely unnoticed, but a footstep is direct feedback to your own action. Because it is the same phenomenon that happens in the real world, you feel very uncomfortable if the feedback doesn't come as quickly as it would in reality. We need to realize a world where both types of feedback are achieved.

Kawakami: It's true that you feel uncomfortable when there is a delay in the response to your movement. Because Sound AR deals with wearables, we need to think all the more carefully about how the human body works.

Yamamoto: If the sound is heard with a delay, it can cause symptoms similar to VR sickness. I tried it myself, deliberately delaying the sound so it didn't match my body's movement, and it made me feel a little sick.

The future of real-time interaction

──What kind of action do you want to take in the future?

Ito: Over the next 10 years, the roles of cloud computing, AI, and devices will be redefined, and we believe that interactions currently taken for granted may change significantly. If interactions change, the relationship between devices, cloud systems, and AI will change, and content may change as well. We believe that the "always-connected environment," in which people are constantly connected to cloud and AI systems, and the "automated environment," in which people can reach content without performing any operation, will advance in various fields. In the area of sound and hearing, always-on devices that can be worn around the ear at all times will surely emerge, along with automated content delivery services that autonomously find users' favorite music and sounds. We believe it is crucial for Sony to get the timing right when this change in sound interaction happens, and we'd like to take various measures so as not to miss this opportunity.

Kawakami: Sound requires very little bandwidth and won't need elaborate mechanisms 10 years from now, so devices should be able to spread widely at low cost. I also think devices will evolve the ability to pick out the information users need. I hope to see a world where people can enjoy various types of information from the Internet while their privacy is fully protected.

Yamamoto: There is still no device in the world that can predict what information to output without explicit input. I think we're in the process of inventing a twenty-first-century mouse. The mouse is just a device that reports changes in coordinates, yet it has had enormous influence on UI. Our initiative is similar: we envision a world where devices sense physical movements such as walking and jumping and return the necessary information as feedback without people being aware of it. We are working on this new initiative to establish Sony's presence in such a world. A future mouse may accept input from a million people at once. I think the very structure of UI will change, so we need to build our architecture with the future in mind. I believe our initiative will be an essential piece of the IoT and AI megatrends, so if we can realize it, it will allow Sony to lead the market for 10 or 20 years.

Ito: I agree with the mouse analogy. Sony has missed several chances to be the first to innovate in interaction, such as the mouse and touch interaction on smartphones. We are now focusing on hearing and sound, but innovations in interaction are happening in many areas at the same time, so I would like to work with our team members to drive innovation without missing this wave.

Kawakami: Sound is a great medium, but it still receives little attention as a means of interaction that takes advantage of technology. Using sound to do the same things VR does, including with images, should create quite interesting experiences, but the industry doesn't seem to be pursuing this deeply. That's why I think it would be a wonderful challenge for a company like Sony, which has cutting-edge technology for producing high-quality sound, to take the initiative in this area. We'd like to make every effort with the next 10 years in view.