Cutting Edge

Aiming for VR Capable of Realizing the Impossible

Volumetric Capture Technology That Goes Beyond Omnidirectional Visualization

Jul 31, 2020

Technology to Capture an Entire Space

Volumetric capture technology converts a person, object, or place into 3D digital data and reproduces it as a high-quality image. This is a type of free-viewpoint video technology that captures the entire real world and allows it to be viewed from any viewpoint. In addition to providing a new video experience, it also has potential as a new content generation method. It is expected to break through conventional video production boundaries and find various applications in the entertainment field. We spoke to two engineers from the R&D Center who are involved in the development of algorithms for volumetric capture technology.

Profile

- Yoichi Hirota, Tokyo Laboratory 09, R&D Center, Sony Corporation
- Hisako Sugano, Tokyo Laboratory 09, R&D Center, Sony Corporation

Expanding from Sports Broadcasting to Entertainment: New and Immersive Video Content

──We heard free-viewpoint video technology is being actively adopted on video production sites.

Yoichi Hirota: Along with progress in virtual reality (VR) technology, free-viewpoint video technology has been used in sports broadcasting for several years and has recently come into use in video content production as well. In particular, omnidirectional visualization technology, which captures 360-degree images, is now supported by online services from various companies, and I am sure many people have already experienced it.

With this as a backdrop, efforts to support omnidirectional visualization technology have also become more active across integrated video production workflows, including imaging, production, transmission, and display. Among other developments toward commercialization, MPEG, a working group that develops standards for video compression, has completed standardization of a 360-degree video format. The Omnidirectional Media Format (OMAF), specified in MPEG-I Part 2, has started being used by creators to produce a variety of new, immersive content. As a result, we are also actively engaging in various initiatives aimed at video production sites.

──What kinds of value can be created through this technology?

Hirota: As free-viewpoint technology evolves, we believe that "free viewpoints" and "reality" will be key elements. The volumetric capture technology that we are currently developing is aimed at going beyond omnidirectional visualization. This technology, which was initially used in sports broadcasting, has now expanded into the entertainment field, and video content creators are recognizing the new value it could bring to concerts and commercials. By leveraging Sony’s existing businesses and imaging/video technology assets, we will be able to capture high-quality free-viewpoint video at low cost, thereby contributing to the creation of a new business that will reach everyone from professionals to general consumers.

Volumetric capture technology is expected to be utilized in various fields such as entertainment and sports

Creating the Feeling That You Are Actually There

──What kinds of issues are there?

Hirota: With the current omnidirectional visualization technology, you can view 360 degrees around you from a certain viewpoint by wearing a headset and moving your head to look around. However, it is not possible to move around objects and view them from behind. This is the major difference from VR content created with CG. A more flexible point of view will be essential for immersing users in VR environments. In order to provide an immersive experience, it will also be necessary to improve the basic qualities of the video itself, such as the resolution and frame rate. This in turn will require the handling of larger amounts of data, so I believe we have a lot of work to do on both the video and display sides.

──How will you go about resolving these issues?

Hisako Sugano: With free-viewpoint video technology, we generate 3D models by placing multiple cameras around a subject and shooting. Volumetric capture technology’s biggest feature is the ability to generate an image from a virtual viewpoint where there was no actual camera, by using the captured 3D models. Creating a virtual viewpoint involves calibrating multiple cameras, generating 3D models of the subjects, mapping textures onto the 3D models, and generating camerawork. In contrast to bullet-time video, where a huge number of cameras are lined up closely next to each other, we create 3D digital data from sparsely distributed cameras to generate videos, allowing video creators and viewers to freely and interactively manipulate their viewpoints.
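The idea of a virtual viewpoint, rendering the reconstructed 3D data through a camera that never physically existed, can be illustrated with a simple pinhole projection. The following is only a minimal sketch under stated assumptions: the function names, the focal length, the image size, and the camera placement on a circle are all hypothetical and are not taken from Sony's system, which additionally involves calibration, 3D reconstruction, and texture mapping.

```python
import math

def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _normalize(a):
    n = math.sqrt(_dot(a, a))
    return tuple(x / n for x in a)

def camera_on_circle(angle_deg, radius):
    """Hypothetical camera position on a horizontal circle around the origin."""
    a = math.radians(angle_deg)
    return (radius * math.sin(a), 0.0, -radius * math.cos(a))

def project_to_virtual_camera(point, cam_pos, target, focal_px, width, height):
    """Project a 3D point into a pinhole camera at cam_pos looking at target.

    Returns pixel coordinates (u, v), or None if the point is behind the camera.
    Assumes the viewing direction is not parallel to the world up axis (0, 1, 0).
    """
    forward = _normalize(_sub(target, cam_pos))
    right = _normalize(_cross(forward, (0.0, 1.0, 0.0)))
    up = _cross(right, forward)

    d = _sub(point, cam_pos)
    depth = _dot(d, forward)
    if depth <= 0:
        return None

    u = width / 2 + focal_px * _dot(d, right) / depth
    v = height / 2 - focal_px * _dot(d, up) / depth  # image y axis points down
    return (u, v)

# Two "real" cameras at 0 and 45 degrees; the virtual one sits between them.
virtual_cam = camera_on_circle(22.5, 5.0)
model_point = (0.0, 1.0, 0.0)  # a point on the reconstructed subject, 1 m up
print(project_to_virtual_camera(model_point, virtual_cam, (0.0, 0.0, 0.0),
                                1000.0, 1920, 1080))
```

Because the projection depends only on the 3D point and the chosen camera pose, the same reconstructed data can be viewed from any position, which is what lets sparsely placed cameras stand in for a dense bullet-time rig.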

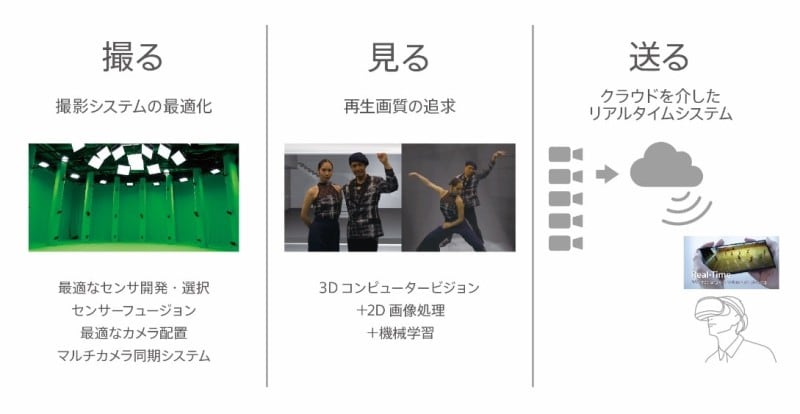

Hirota: Currently, we are carrying out technology development focused on capturing, displaying, and transporting. Sony’s unique strength, arguably, lies in creating new value by bringing all three of these areas together.

Gathering Together All of Sony’s Image Signal Processing Technologies

──We would like to ask about the core technologies. What kinds of technology are involved in capturing?

Hirota: Naturally, the optimal shooting system depends on what the subject is. There are some purely academic examples of huge systems comprising several hundred cameras, but this is not very realistic from a business point of view. Shooting systems using the current volumetric capture technology are specifically for filming relatively small areas, and the subject is more or less required to stay in one place. As a result, they are not particularly well suited to capturing music artists moving around, or large numbers of people performing simultaneously.

In contrast, our shooting system, which targets the entertainment industry, is unique in that it can capture relatively large areas with one or more people walking around, dancing, or giving other kinds of performances. In order to enhance this even further, we have continuously carried out development and proof-of-concept trials on the sensors and lenses, systems for synchronizing multiple cameras, camera and light arrangements, and materials for chroma key backgrounds.

──We heard that you have opened a new shooting studio!

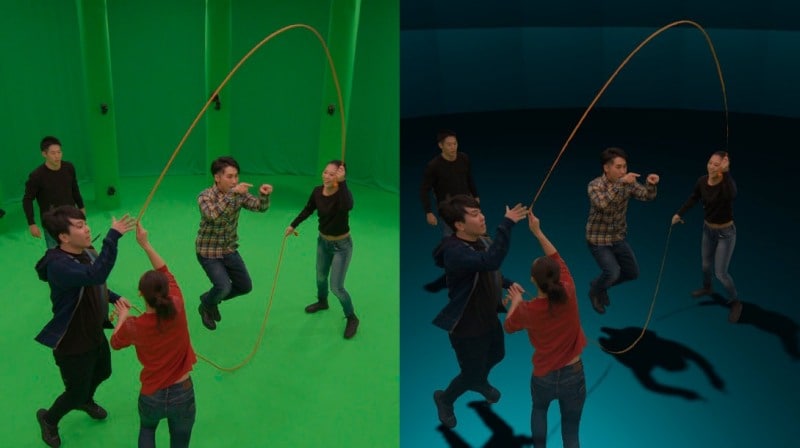

Hirota: That’s right. In January 2020, we opened one of the largest volumetric capture studios in Japan at Sony’s headquarters, complete with the technologies we have accumulated. The first thing we shot in this studio was a game of Double Dutch, which involves two skipping ropes swung in opposite directions. A game of Double Dutch with five people was optimal for making maximum use of the studio’s unique 5-meter shooting area. The thin ropes moving at high speeds meant that filming and signal processing were challenging, but the shooting was a great success. We were able to demonstrate the technology’s potential not only for visual expression but also for sports analysis. Moving forward, we are aiming to accelerate technology development and business verification in collaboration with parties inside and outside the company.

Shooting at one of Japan’s largest photography studios at Sony’s headquarters (in cooperation with the professional Double Dutch team J-TRAP)

──What sorts of challenges do you face with capturing?

Hirota: Synchronizing multiple cameras is one of the main challenges of volumetric capture technology. All the cameras need to shoot at exactly the same time, and their images must then be transferred and aggregated to build the 3D data. To that end, we have been developing and evaluating our hardware and software, including imaging sensors with global shutters, ways of distributing signals simultaneously to each camera, and methods of resynchronizing the cameras when aggregating the data.
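As a rough illustration of what resynchronizing during aggregation can mean in software, the sketch below groups frames from several cameras by capture timestamp and keeps only the sets in which every camera has a frame close enough to the reference. It is a minimal sketch under stated assumptions, not Sony's method: the function names, the microsecond timestamps, the camera labels, and the tolerance are all hypothetical, and hardware-level signal distribution is not modeled.

```python
from bisect import bisect_left

def nearest(sorted_ts, t):
    """Return the timestamp in sorted_ts closest to t, or None if the list is empty."""
    if not sorted_ts:
        return None
    i = bisect_left(sorted_ts, t)
    candidates = sorted_ts[max(i - 1, 0):i + 1]
    return min(candidates, key=lambda x: abs(x - t))

def group_frames(streams, tolerance_us):
    """Group frames across cameras by timestamp.

    streams: dict mapping a camera id to a sorted list of capture
             timestamps in microseconds (hypothetical input format).
    Returns a list of dicts {camera_id: timestamp}, one per frame set in
    which every camera has a frame within tolerance_us of the reference.
    """
    cams = sorted(streams)
    ref = cams[0]  # the first camera serves as the timing reference
    groups = []
    for t in streams[ref]:
        group = {ref: t}
        for cam in cams[1:]:
            match = nearest(streams[cam], t)
            if match is None or abs(match - t) > tolerance_us:
                break  # this camera has no usable frame; drop the set
            group[cam] = match
        else:
            groups.append(group)
    return groups

# Three hypothetical cameras at roughly 60 fps; camera C dropped its last frame.
streams = {
    "A": [0, 16667, 33333],
    "B": [40, 16700, 33390],
    "C": [10, 16650],
}
for frame_set in group_frames(streams, tolerance_us=100):
    print(frame_set)
```

Only complete, tightly aligned frame sets are passed on, since a 3D reconstruction built from frames captured at slightly different moments would smear fast-moving subjects such as skipping ropes.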

Pursuing Playback Quality and Producing More Realistic Images

──What sorts of challenges do you face with displaying?

Hirota: With volumetric capture technology, it is necessary to use 3D computer vision to create virtual viewpoints where there are no cameras; this is the rendering process. The problem here is the sense of unnaturalness that people sometimes refer to as the "uncanny valley." Sony has solved this problem by combining its advanced 2D image processing technology with machine learning technology. The images may be synthesized, but it is the image quality on the final display that ultimately matters.

Sugano: We are now using more than four times the number of cameras we were using at the beginning, and with the resolutions of display devices evolving from 2K to 4K and 8K, it has become possible to produce more realistic images. The range of movement and the number of subjects that can be captured have also improved greatly since the development began.

Hirota: The videos we have produced are of such high quality that you would think they had really been shot with a camera at your viewpoint. They have quite clearly surpassed the volumetric capture technology available until now and have been extremely well received by a number of creators. We will continue to focus on differentiating our technology not only by improving image quality, but also by pursuing greater ease of use.

Comparison of video from a standard camera on the left, and that taken with our volumetric capture technology on the right

──What sorts of challenges do you face with transporting?

Hirota: Another advantage of our system is that it can carry out everything from capturing to distributing content in real time. The amount of uncompressed data shot by a large number of cameras can reach up to 100 GB/sec, so at present, it is not realistic to process this on local computers. In order to flexibly secure powerful computing resources, we developed a unique cloud processing system that is highly expandable. As a result, users can now freely choose their viewing angle of an artist’s live broadcast performance in real time and enjoy further kinds of interaction. It is also expected to be used for next-generation methods of communication.
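To give a feel for how uncompressed multi-camera video reaches this order of magnitude, the back-of-the-envelope calculation below multiplies out the per-frame size, frame rate, and camera count. All of the figures are illustrative assumptions (64 cameras, 3840x2160 pixels, 3 bytes per pixel, 60 frames per second) and do not describe the actual configuration of Sony's studio.

```python
def uncompressed_rate_bytes_per_sec(cameras, width, height, bytes_per_pixel, fps):
    """Raw data rate of a multi-camera rig, ignoring compression and overhead."""
    return cameras * width * height * bytes_per_pixel * fps

# Hypothetical rig; none of these numbers come from the article.
rate = uncompressed_rate_bytes_per_sec(
    cameras=64, width=3840, height=2160, bytes_per_pixel=3, fps=60
)
print(f"{rate / 1e9:.1f} GB/s")
```

Under these assumed values the rate comes to roughly 96 GB/s, which shows why even a moderately sized rig can outgrow what a local workstation can ingest and process.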

──We heard you collaborated with Sony Music Entertainment in the entertainment field.

Hirota: Through this volumetric capture technology, we are now able to capture people and places as 3D data and reproduce them as high-quality images. To explore how this technology can be used, we have been working with Sony Music Entertainment Japan (SMEJ), which helps us by, for example, offering venues for proof-of-concept trials. Being able to try out new uses and develop the technology alongside those in the entertainment field is surely one of Sony’s biggest strengths.

Sugano: When our content was projected on a huge screen at the concert venue, we could feel a welling-up of emotion in the crowd. At the same time, it was a chance for us to see the work of professional artists up close, and that inspired us to work even harder. I was extremely moved to see the technology that we had developed used in a collaboration with the world of entertainment. When I checked Twitter after the concert and the television broadcast, there were lots of people asking about the background video and how we had managed to get it to spin the way it did, and it made me really happy.

──Please tell us about the things that you would like to realize and achieve in the future.

Sugano: Our research group continually carries out R&D to achieve our mission to “provide technology that allows the manipulation (capture, display, and transport) of 3D spaces through the complete digitalization of real spaces.” The thing we are putting most effort into is the real-time distribution of free-viewpoint videos. Previous free-viewpoint technology also allowed recorded content to be transferred, but what we are trying to achieve in the future is the ability to see and talk to subjects in a remote location while freely changing the viewpoint in real time. After this, we want to create a video experience that would allow users to share a space remotely, interact with each other, and really feel as if the other person were there with them.

Hirota: 5G technology, which can provide high-capacity communications, is beginning to roll out, and we are nearing an era in which VR content is something anyone can experience. When technology in various areas reaches maturity, we will be able to capture and share experiences in 3D naturally and freely, just as we do now with 2D images and videos.

The technology is currently under consideration for application to AR content using smartphones